Podcast: Play in new window | Download

Subscribe: Apple Podcasts | Spotify | TuneIn | RSS

We continue our dive into Rob Conery’s The Imposter’s Handbook as Allen is Allen, Joe is Michael, Michael is Joe.

Using you podcast player to read these notes? View this episode’s full show notes and participate in the discussion at https://www.codingblocks.net/episode88.

Sponsors

- Subscribe and listen to the Techmeme Ride Home podcast

- Listen via iTunes

- Listen via Google Play

- Datadog.com/codingblocks – Sign up today for a free 14 day trial and get a free Datadog t-shirt after creating your first dashboard.

Survey Says

News

- Thank you to everyone that took time out of their busy day to leave us a review.

- iTunes: LegoParsons, Dennis Adolfi, gerbacca, PS2178, TEKSystems

- Stitcher: ChocolateIT, timmyNicePen

- Pick up your copy of The PowerShell Conference Book by Mike F Robbins, Michael T Lombardi, and Jeff Hicks (Leanpub).

Algorithmic Complexity

- Algorithmic Complexity is the study of how well algorithms scale – both in time and space.

- It can be used to compare different algorithms to see which perform better, and may also help establish performance expectations for different size data.

- Big O is the mathematical notation that we use is used to formally describe the limiting behavior of a function.

- “function” vs “algorithm” – Big O isn’t just for algorithms, can be used to study purely mathematical functions so often you’ll see the word “function” used in place of algorithm when reading about big O,

- Limiting behavior: essentially it means boiling down your analysis to the most significant bits,

- In practice, it means that big O ignores certain data points, like constants, that aren’t significant to the growth in the long run,

- Practical example of the “limiting behavior” (aka Asymptotic Analysis):

- If you loop through an array of string and perform 4 actions (like, convert the string to a decimal, multiply the number by 100, convert to an int, convert to a string) then your algorithm performs 4 * the size of your array operations.

- An array with 100 objects in it will perform 400 operations.

- Big O doesn’t worry about the constant, because the number of operations (4 in this case) doesn’t affect the growth curve – essentially means that the constants don’t matter as input size grows.

- That means that an algorithm that loops through an array and does 4 operations will have the same big O as an algorithm that does 10,000.

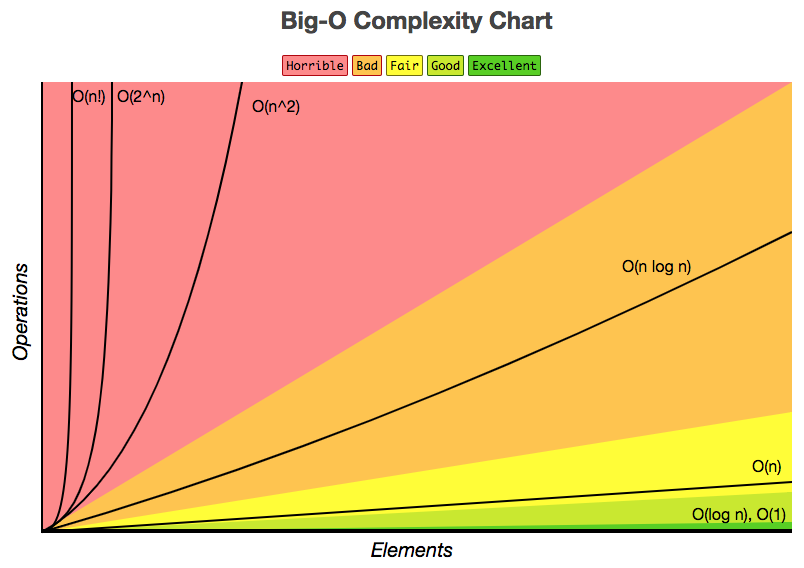

Big O

- Big O is measure of how well your code “scales”.

- “Scales” is an overloaded term now, in this sense you can think of it as how well the algorithm performs on a single CPU or in memory.

- Big O is all about comparing algorithms, and predicting their performance.

- Created by Paul Bachmann, Edmund Landau, and others.

- Bachmann, originally began to lay the groundwork for big O back in 1894.

- Over the years, others contributed to what we now know as big O starting with Landau in 1909.

- Also called Bachmann-Landau notation or asymptotic notation.

- The O stands for “order of the function”, although originally it was just “order of”.

- Big O is generally recognized as the Upper Bound, or worst case scenario, for an algorithm – but it’s not strictly limited to that. We don’t go deep into this but it’s good to know that you can also describe things like the best run time, or average run time with big O notation and other symbols (theta, little o, et al).

O(1)

- O(1) are constant – operations aren’t affected by the input size, over time they take roughly the same amount of resources to run.

- For example, if an O(1) operation takes 1 second to run with an input size of 1, then it should still take about 1 second to run with an input size of 1 thousand.

- An example of an O(1) operation would be to directly access an element of an array, like arr[0], for example.

- This is green on the Big-O Cheat Sheet.

O(n)

- Order n operations take more time to run for larger inputs, but the growth rate is linear, or proportionate to the input size.

- For example, if an O(n) algorithm takes 1 second to run for an input size of 1, then we would expect an input size of 1,000 to run in 1,000 seconds.

- An example of an O(n) operation would be an algorithm that requires access to each element of an array.

- If we were trying to find the largest number in the array, assuming the array had random numbers and wasn’t sorted, we’d have to visit each element of the array.

- This type of operation is called linear, meaning, as the size of n grows, so does the number of operations.

- This is yellow on the Big-O Cheat Sheet.

O(n^2)

- In O(1), you don’t have to look at all of your input. O(n) you look at it (at most) once. But for O(n^2) (worst case) you look at each input once for each input!

- O(n) operation happens when we iterate over our array inside of a loop where we’re iterating over our array.

- Suppose, we want to take an array of 5 integers and make a new array that pairs each number with every other number like [{1,1}, {1,2}, …{5,4}, {5,5}]. One implementation might be to loop over the array in an outer loop and then loop over the same array in an inner loop to return the pair at the current outer & inner indexes.

- The outer loop takes n operations and the inner loop also takes n operations. Which is n * n or n squared (n^2).

- Given that implementation, an array of 5 elements would take 25 operations to produce the desired result.

- This type of operation would not be considered efficient.

- Anytime you see nested loops over the same list, array, etc, beware … it’s O(n^2).

- This is red on the Big-O Cheat Sheet.

- Seems like a lot of interview problems will have an obvious O(n^2) solution that can be optimized to run much faster.

- What about O(n^3)!?!? Yep, that’s a thing, and it’s worse just like you’d expect.

O(log n)

- O(log n) operations only look at a fraction of the input.

- A real world example is looking something up in a dictionary (remember those?).

- An example of O(log n) operations is when we’re able to keep dividing n in half

- “Keep” is an important word, if your algorithm only looks at half your input, then it still scales linearly, O(n)

- You have to keep dividing in half, over and over again, like looking up a word in the dictionary – flip to the middle, and then…

- Other real world examples include binary search algorithm, and git bisect.

- With O(log n), if n = 5, we can reduce our operations to 3.

- And if n = 100, we’d have only 7 operations!

- This is green on the Big-O Cheat Sheet.

O(n log n)

- This type of operation takes n * log n operations, frequently seen in divide and conquer type algorithms like merge sort.

- Consider a loop within a loop again over the same collection. But this time, the inner loop is doing a binary search, instead of iterating over the entire array

- We already know that the outer loop takes n operations.

- And we know that a binary search takes log n operations.

- So this example would be outer operations * inner operations or n * log n or just n log n.

- This is orange on the Big-O Cheat Sheet

- This is much more efficient than O(n^2)

O(2^n)

- “Algorithms with running time O(2^N) are often recursive algorithms that solve a problem of size N by recursively solving two smaller problems of size N-1.” (Matt Timmermans, Stack Overflow).

- Examples are tough, but essentially you are dealing with each input 2^(input size) times

- Imagine a loop that starts at 0, and stops at 2^n – you pass it an array with 5 elements and it does 32 operations, 10 elements and it does 1,024 operations.

- This is red on the Big-O Cheat Sheet.

O(n!)

- The worst big O we normally talk about.

- An example: list all permutations of an array is O(n!), and that’s because there are n! permutations of an array.

- This is red on the Big-O Cheat Sheet.

Resources We Like

- The Imposter’s Handbook (bigmachine.io)

- Everything is Fast for Small n (Coding Horror)

- Big-O Cheat Sheet

- Big O notation (Wikipedia)

- Algorithmic Complexity (Carnegie Mellon University)

- Examples of Algorithms which has O(1), O(n log n), and O(log n) complexities (Stack Overflow)

- Numberphile – Videos about numbers – it’s that simple. (YouTube)

- Example of Big O of 2^n (Stack Overflow)

- Recurrence relation (Wikipedia)

- Fibonacci number (Wikipedia)

- Why is Fibonacci Sequence Big O(2^n) instead of O(logn)? (Stack Overflow)

- Factorial (Wikipedia)

Tip of the Week

- Pythonista 3 – A full Python IDE for iOS (OMZ Software, iTunes)

- Thanks joe_recursion_joe!

- More ways to create fake data:

- dev.to – A great resource to find tech blog articles you might not have otherwise found, as well as a great place to contribute your blog articles to reach new readers you might not have otherwise found.